If you’ve spent any time keeping tabs on AI and Machine Learning, you know that people are throwing around terminology and definitions however they want.

What is actually “AI”? What is a prompt? What does it mean to “train” or “fine-tune” a model?

What is an “Agent”?

The list goes on. You and I shouldn’t care too much about getting the definitions right.

What you should care about is using the models and tools available in the context of your business.

Let me explain.

1. Reasoning Models Primer

By Reasoning Models, we mean models that answer complex questions by working through multiple intermediate steps before giving a final answer.

For example, asking a factual question (such as “What is the capital of the USA?”) does not involve complex reasoning or multiple steps.

But, if you ask a question like:

“If our 7-day onboarding email sequence is converting new subscribers into leads for our sales team at 10%, what can we do to increase the conversion rate and shorten the time from new subscriber to warm lead?”

This requires access to context (data, emails) and for the model to “reason” its way through toward several recommendations for what you could do. It will also take the model a few minutes, sometimes longer, to answer you. This is a good thing.

When do we need a Reasoning Model? Reasoning models are designed to be good at complex tasks such as solving puzzles, advanced math problems, and challenging coding tasks.

However, they are not necessary for simpler tasks like summarization, translation, or knowledge-based question answering.

In fact, using reasoning models for everything can be inefficient and expensive. Reasoning Models are typically more expensive to use, more verbose, and sometimes more prone to errors due to “overthinking.”

Do you see the difference between a simple prompt and a prompt for a Reasoning Model? You could ask simple, factual questions but you’d be wasting the potential of the model.

That means you don’t prompt a Reasoning Model the same way you’d prompt a “regular” LLM, such as GPT 5.2 or Claude 4.5 Opus with their thinking modes turned off.

So, for use in your business, the models you’ll want to experiment with are:

- o1, o3, or the more recent GPT 5.2 (from OpenAI)

- Claude 4.5 Opus or Sonnet with Thinking mode (from Anthropic)

- Grok 4 with Think mode (from xAI)

- R1 (from DeepSeek)

There are many more smaller and less capable Reasoning Models, with more on the way. I think we’ll see specialized models for various categories (medical research, math and logic, engineering, etc.).

But if you understand how they work and how to use them, any new models will only make your use of them better.

You can access these via ChatGPT.com, Claude.ai, Grok.com, or a model aggregator like Poe.com (access all models in one interface).

Now, most regular LLMs are capable of basic reasoning and can answer questions like, “If a train is moving at 60 mph and travels for 3 hours, how far does it go?”

You don’t necessarily need o1, o3, or GPT 5.2 to answer that question. But ordinary LLMs have limitations on how far and “deep” they go into something that looks like reasoning.

Reasoning Models excel at more complex reasoning tasks, such as solving puzzles, riddles, problems, and mathematical proofs. They can process inputs and generate answers that are likely to be true, and they can interpret and infer meaning from complex sets of information.

In the context of your business, reasoning—the ability to draw informed conclusions and make decisions based on given information—is pretty useful for understanding complex situations and problem-solving.

As you probably already know, humans use different kinds of reasoning when we’re thinking things through. If you’re trying to solve a math problem, you’re using mathematical reasoning and logic. You’re not using social reasoning.

Makes sense, right?

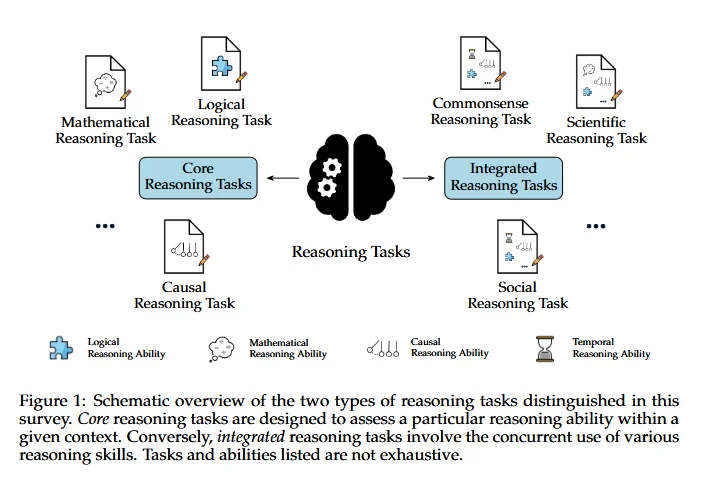

Reasoning Models do something similar:

They use different kinds of reasoning for different kinds of problems or tasks. You can classify types of reasoning in several ways but the above graphic is one helpful way to understand it.

Another visual to help you better see and understand what’s going on with these models:

You, as a human, engage in these modes of reasoning and thinking all the time (whether you’re aware of it or not).

Let’s look a little closer at a few reasoning capabilities that matter for your business.

Frankly, if you understand this and practice these skills on your own, using your own brain, you’ll have an advantage over other entrepreneurs who never use thinking as a strategic tool.

2. Reasoning Capabilities To Use In Your Business

To basically “install” reasoning in LLMs, researchers focus on foundational skills that allow these models to navigate complex information and respond in ways that resemble human cognitive processes.

Reasoning in LLMs involves synthesizing insights, applying learned knowledge to new contexts, and handling complex prompts. To do this, you need different kinds of reasoning capabilities.

Here are some key capabilities that enhance an LLM’s reasoning abilities—and you can use them yourself, just you and your brain.

Inference

Here’s a good definition:

“Inference in LLMs involves deriving new insights or knowledge from the information at hand. Unlike basic retrieval or recall, inference allows the model to recognize relationships, make informed assumptions, and bridge information gaps to reach well-founded conclusions.”

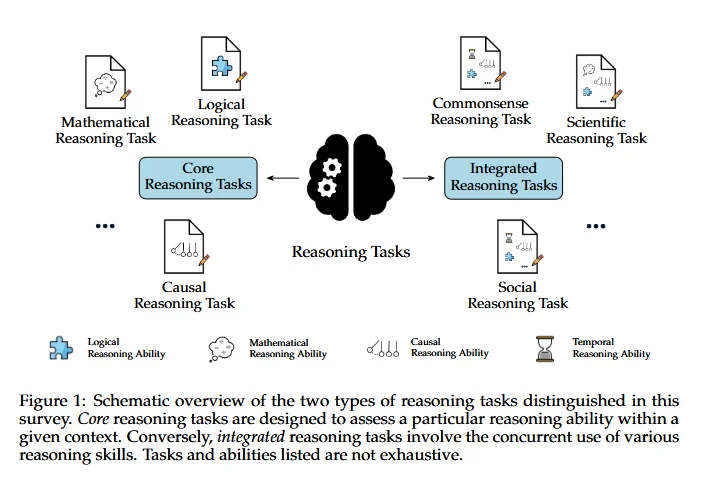

Inference looks something like this:

Source: Natural Language Reasoning, A Survey

For example, given the statement ‘All dogs can bark, and this animal is a dog,’ an LLM with strong inferential reasoning skills would deduce that ‘this animal can bark’.
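To make the dog example concrete, here is a minimal sketch of that deduction in Python. It only illustrates the logical step; an LLM does not store facts and rules this way, and the `infer` function and its fact/rule format are assumptions invented for this example.

```python
# Toy forward-chaining inference. Illustrative only: the rule format and
# the function name are hypothetical, not how an LLM works internally.

def infer(facts: set[str], rules: list[tuple[str, str]]) -> set[str]:
    """Apply 'if premise then conclusion' rules until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in rules:
            if premise in known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


rules = [("is a dog", "can bark")]     # "All dogs can bark"
facts = {"is a dog"}                   # "This animal is a dog"
derived = infer(facts, rules) - facts  # what we learned beyond the given facts
print(derived)  # {'can bark'}
```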

Problem-Solving

Problem-solving involves a process of analyzing and responding to challenges by identifying optimal solutions based on the input data.

And when a Reasoning LLM is given complex queries containing unclear or conflicting details, it adapts by considering alternative interpretations and adjusting the response.

In other words, it tries to solve a problem much in the same way a human would. Just like you and I are adaptable, these models can be too.

That matters for tasks without a straightforward answer, where the model has to weigh pros and cons or integrate broader context to reach a coherent solution.

Effective problem-solving also requires the model to adapt based on outcomes from prior interactions, refining its approach iteratively. A Reasoning Model can do this.

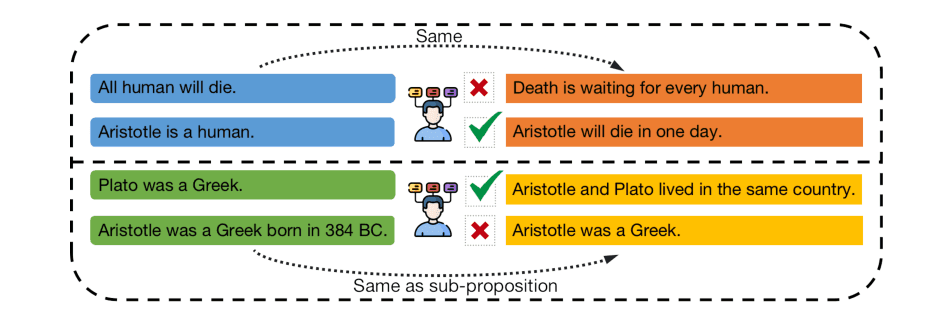

The Tree-of-Thought strategy is often used to solve complex problems:

Source: Large Language Model Guided Tree-of-Thought
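If you want a feel for the idea, here is a rough sketch of a Tree-of-Thought style search in Python. It is a simplified beam-search variant, not the method from the cited paper. In a real system an LLM would propose and rate the "thoughts"; here the `expand`, `score`, and `is_goal` functions are stand-ins on a toy number puzzle, and every name is an assumption made for illustration.

```python
from typing import Callable, Optional

# Simplified Tree-of-Thought style search: branch into several candidate
# next steps, score them, keep only the most promising, and repeat.


def tree_of_thought(
    start: int,
    expand: Callable[[int], list[int]],  # proposes next "thoughts"
    score: Callable[[int], float],       # rates how promising a thought is
    is_goal: Callable[[int], bool],      # checks whether we are done
    beam_width: int = 2,
    max_depth: int = 5,
) -> Optional[list[int]]:
    """Return the first path that reaches the goal, or None if none is found."""
    frontier = [[start]]
    for _ in range(max_depth):
        candidates = [path + [nxt] for path in frontier for nxt in expand(path[-1])]
        for path in candidates:
            if is_goal(path[-1]):
                return path
        # Keep only the best few branches and drop the rest.
        candidates.sort(key=lambda path: score(path[-1]), reverse=True)
        frontier = candidates[:beam_width]
    return None


TARGET = 10  # toy puzzle: get from 1 to 10 using +1, x2, or x3
path = tree_of_thought(
    start=1,
    expand=lambda n: [n + 1, n * 2, n * 3],
    score=lambda n: -abs(TARGET - n),
    is_goal=lambda n: n == TARGET,
)
print(path)  # [1, 3, 9, 10]
```

The point is the shape of the process: explore several options at each step, evaluate them, and only go deeper on the ones that look promising.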

Understanding Context

As a human, if you’re tasked with solving a problem, you need relevant context to do so. For example, if you’re asked to answer the question:

“If our 7-day onboarding email sequence is converting new subscribers into leads for our sales team at 10%, what can we do to increase the conversion rate and shorten the time from new subscriber to warm lead?”

What do you need to answer this? You need context like email metrics and performance data, the emails themselves, profiles of your new subscribers, profiles of warm leads, and sales conversation data.

A Reasoning Model would need the same context so it can reason its way to an answer.

By the way, this is why a lot of prompts I see people use are terrible. They assume the model knows everything about everything, and that what it knows is accurate. But these models don’t “know” everything—and they lack crucial, specific data and information about your business that you have to provide in order to get an accurate and relevant answer to your prompts.

If you don’t provide the relevant context to a model, it won’t solve your problem accurately. It’ll tell you something but it’ll be generic and inaccurate.

Understanding context goes beyond understanding the immediate meaning of words and involves capturing the relationships between ideas, the flow of discourse, and the subtleties that shape meaning over multiple interactions.

This means that the model must recognize details from previous exchanges or documents to maintain a consistent narrative or accurate response.

If you’re asking o1, o3, or GPT 5.2 to solve a problem and you don’t give it the relevant context, you won’t get a good answer back.
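One way to make "provide context" a habit is to assemble your prompt from labeled pieces. The sketch below shows one possible approach, assuming you paste your own material into each section. The `build_prompt` function, the section labels, and the placeholder values are all hypothetical.

```python
# Assemble a prompt that carries business context along with the question.
# Section names and sample values are placeholders, not real data.


def build_prompt(question: str, context: dict[str, str]) -> str:
    """Put each piece of context under a labeled heading, then the question."""
    parts = []
    for label, content in context.items():
        if content.strip():  # skip empty sections rather than sending blanks
            parts.append(f"## {label}\n{content.strip()}")
    parts.append(f"## Question\n{question.strip()}")
    return "\n\n".join(parts)


context = {
    "Email metrics": "<paste open, click, and reply rates per email>",
    "The emails themselves": "<paste all 7 emails>",
    "New subscriber profiles": "<paste profiles>",
    "Warm lead profiles": "<paste profiles>",
    "Sales conversation data": "",  # nothing to share yet, so it is skipped
}
prompt = build_prompt(
    "How can we raise our 10% subscriber-to-lead conversion rate?", context
)
print(prompt)
```

The output is plain text you can paste into any of the chat interfaces mentioned earlier.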

Complexity Handling

Complex reasoning requires engaging in multi-step deductions, managing details, and weighing conflicting pieces of information.

Fields like legal reasoning, medical diagnostics, and scientific research rely on a multi-layered approach that integrates facts, probabilities, and hypotheses sequentially.

A business is usually less complex than a scientific field, which is good news for us. These models sometimes struggle with complex mathematical calculations (not the very simple math a business uses), and while they can take on hard scientific problems like discovering new protein folds, they often miss.

You’re probably not running a business that solves all the hard problems of the universe.

But, you can ask a Reasoning Model to solve a multi-step question based on clues scattered across various documents or data sources, and it’ll perform pretty well.

To find the correct solution, the model must interpret each piece of information accurately and deduce relationships among them. In other words, it needs to handle and manage complexity.

A Reasoning Model basically engages in layered thinking—a process of deducing, verifying, and integrating conclusions step-by-step.

This approach is especially relevant when multiple plausible solutions exist, requiring the model to balance contextual cues and probabilities to find the most likely answer.

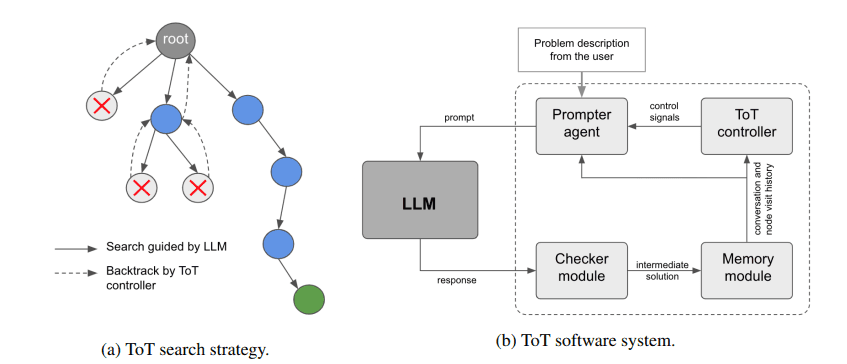

A good visual to help illustrate this:

Source: Large Language Models as an Indirect Reasoner

Hybrid Thinking

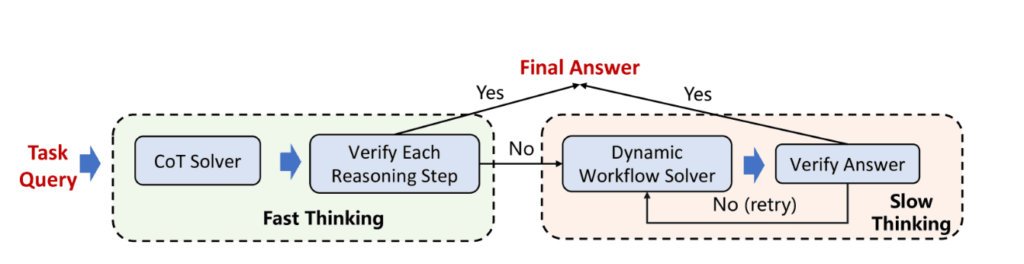

Frameworks like hybrid thinking models have been developed to improve performance in reasoning tasks.

Basically, models dynamically switch between fast (intuitive) and slow (deliberate) reasoning modes based on task difficulty, supposedly enabling the model to adjust its analytical depth according to complexity.

Here’s a visual overview of this approach:

Source: HDFlow: Enhancing LLM Complex Problem-Solving with Hybrid Thinking and Dynamic Workflows

It’s not clear yet whether this makes a big difference, but it’s an interesting capability. As a human, I do hybrid thinking all the time (you probably do, as well). When I try to solve a problem, there’s always an intuitive, exploratory phase, often followed by slower, more deliberate reasoning. And I go back and forth between these modes.
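To picture the fast/slow switch, here is a deliberately crude sketch of a router in Python. The keyword heuristic, the threshold, and the mode names are all made up for illustration. HDFlow and commercial models use their own, far more sophisticated mechanisms.

```python
# Route a prompt to a "fast" or "slow" mode using a rough difficulty guess.
# The hints, scoring, and threshold are hypothetical.

MULTI_STEP_HINTS = ("why", "how can", "trade-off", "compare", "plan", "step")


def estimate_difficulty(prompt: str) -> int:
    """One point per hint found in the prompt, plus one point per 50 words."""
    text = prompt.lower()
    hint_points = sum(1 for hint in MULTI_STEP_HINTS if hint in text)
    length_points = len(text.split()) // 50
    return hint_points + length_points


def choose_mode(prompt: str, threshold: int = 2) -> str:
    """Return 'slow' for prompts that look multi-step, otherwise 'fast'."""
    return "slow" if estimate_difficulty(prompt) >= threshold else "fast"


print(choose_mode("What is the capital of the USA?"))                       # fast
print(choose_mode("Compare these two pricing models and plan a rollout."))  # slow
```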

Now, let’s look at different types of reasoning.

3. The Five Types of Reasoning In Your Business

LLM reasoning covers several distinct types, each contributing to the model’s ability to interpret, deduce, and infer meaning effectively.

Researchers and developers can strategically design (or prompt) models for specific applications and problem-solving needs by categorizing these reasoning types.

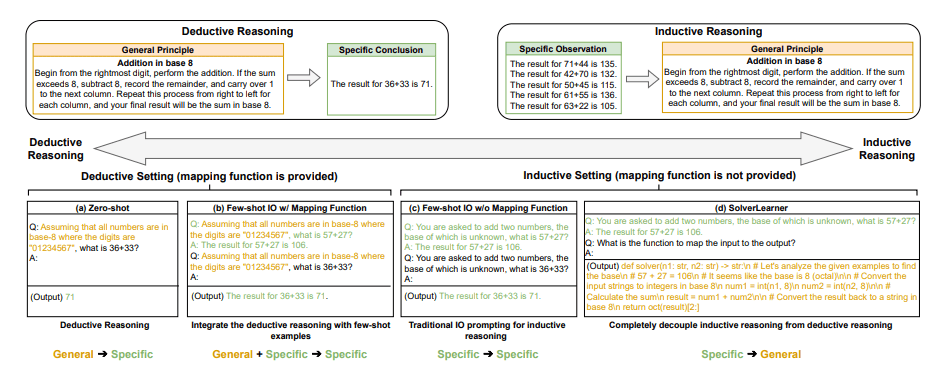

This illustration highlights deductive versus inductive reasoning methods:

Source: Inductive or Deductive? Rethinking the Fundamental Reasoning Abilities of LLMs

Each reasoning type contributes to the overall performance and accuracy, and each type also helps the models handle complex queries, process logic, and even tackle abstract thinking.

You, as a human, use these types of reasoning, too. And if you’re not doing so strategically yet, you should get started today.

Deductive Reasoning

Deductive reasoning works by taking general rules we already know and applying them to specific situations. It’s like using a rulebook to figure out what should happen in a particular case.

For example, when working with structured knowledge tasks, deductive reasoning helps models stay logically consistent and reach conclusions based on established facts.

Let’s look at a business application example:

An e-commerce company has a policy that “all products with less than 10% profit margin should be discontinued.”

After analyzing sales data, they identify that Product X has only a 7% profit margin. Using deductive reasoning, they conclude that Product X should be discontinued according to their policy.

This reasoning helps standardize inventory decisions across the organization, and eliminates underperforming products.

Now imagine you had, let’s say, an Agent that uses a Reasoning Model to continually scan your sales data and apply deductive reasoning to all of it, telling you which products to discontinue, which to keep, and even how to increase profit margins for products that are in some kind of “danger zone”.

Do you see how powerful that could be?
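The rule in this example is simple enough to write down directly. Here is a minimal sketch, assuming margin is calculated as profit divided by revenue. The product names and numbers are invented, and the function names are hypothetical.

```python
# Deductive rule from the example: margin below 10% means "discontinue".
# Product data is made up for illustration.

MIN_MARGIN = 0.10  # the policy threshold


def profit_margin(revenue: float, cost: float) -> float:
    """Profit margin as a fraction of revenue (revenue must be non-zero)."""
    return (revenue - cost) / revenue


def products_to_discontinue(products: dict[str, tuple[float, float]]) -> list[str]:
    """Return names of products whose margin falls below the policy threshold."""
    return [
        name
        for name, (revenue, cost) in products.items()
        if profit_margin(revenue, cost) < MIN_MARGIN
    ]


products = {
    "Product X": (1000.0, 930.0),  # 7% margin
    "Product Y": (1000.0, 800.0),  # 20% margin
}
print(products_to_discontinue(products))  # ['Product X']
```

A fixed rule like this handles the clear-cut cases. The Reasoning Model earns its keep on the follow-up questions, like what to do about products sitting just above the line.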

Inductive Reasoning

Inductive reasoning lets models make general conclusions based on patterns they observe. Unlike deductive reasoning (which follows strict rules), inductive reasoning makes educated guesses about new information based on past observations.

This type of reasoning works well in changing environments with lots of data where rules aren’t clearly defined. However, the conclusions may be less reliable because they’re based on probability rather than certainty.

Another business example:

An email marketing team notices that their last five email campaigns with emoji-filled subject lines had 25-30% higher open rates than campaigns without emojis.

Using inductive reasoning, they conclude that “emoji-filled subject lines generally increase open rates” and create a new guideline to include emojis in future campaigns.

This approach helps identify successful marketing strategies, improves customer engagement metrics, and allows the team to adapt quickly to changing consumer preferences without knowing exactly why emojis work better.

Now imagine if you had an Agent with a Reasoning Model run this kind of analysis continually and give you recommendations every week or month.
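The observation behind that guideline is basic arithmetic you can check yourself. Here is a small sketch with made-up open rates (five campaigns each). The `relative_lift` helper is a name invented for this example.

```python
from statistics import mean

# Compare average open rates for campaigns with and without emojis.
# The rates below are invented sample data, not real campaign results.


def relative_lift(with_emoji: list[float], without_emoji: list[float]) -> float:
    """Relative difference in average open rate (0.25 means 25% higher)."""
    baseline = mean(without_emoji)
    return (mean(with_emoji) - baseline) / baseline


emoji_rates = [0.26, 0.25, 0.27, 0.25, 0.26]
plain_rates = [0.20, 0.21, 0.19, 0.20, 0.20]

lift = relative_lift(emoji_rates, plain_rates)
print(f"Open rates were {lift:.0%} higher with emojis")
```

Keep in mind that five campaigns is a small sample, which is exactly why inductive conclusions are probabilities rather than certainties.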

Abductive Reasoning

Abductive reasoning tries to find the most likely explanation for a set of observations. It’s especially useful when working with uncertain or incomplete information.

Instead of using strict rules or labeled data, it focuses on finding plausible explanations that best match the available evidence.

Business example:

A software company notices a sudden 30% drop in user engagement with their app. After reviewing recent changes, they hypothesize that the most likely explanation is a bug introduced in the latest update that makes certain features harder to access.

While there could be other causes (like a competitor launching a better product or seasonal usage patterns), this abductive reasoning helps them prioritize bug-fixing resources.

This approach allows the company to respond quickly to problems, formulate testable hypotheses, and begin addressing issues before having complete information.

What if you had an Agent with a Reasoning Model perform tasks like this, 24/7?

Are you starting to see it? Most reasoning and thinking tasks that you would ask a human to do can now be given to Reasoning Models.
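One simple way to think about "most likely explanation" is to score each hypothesis by how plausible it was beforehand and how well it explains what you observed. The sketch below does that with placeholder numbers. The probabilities are pure guesses for illustration, and `rank_explanations` is a hypothetical helper.

```python
# Rank candidate explanations by prior * likelihood, then normalize.
# All probabilities are made-up placeholders, not measurements.


def rank_explanations(
    hypotheses: dict[str, tuple[float, float]]
) -> list[tuple[str, float]]:
    """Each value is (prior plausibility, chance of seeing the drop if true)."""
    scores = {
        name: prior * likelihood
        for name, (prior, likelihood) in hypotheses.items()
    }
    total = sum(scores.values())
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [(name, score / total) for name, score in ranked]


hypotheses = {
    "Bug in latest update": (0.5, 0.8),
    "Competitor launch": (0.2, 0.5),
    "Seasonal dip": (0.3, 0.2),
}
ranking = rank_explanations(hypotheses)
for name, share in ranking:
    print(f"{name}: {share:.0%}")
```

With these numbers the bug comes out on top, so that is where you would look first, while keeping the other explanations on the list.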

Analogical Reasoning

Analogical reasoning helps models find similarities between different concepts, contexts, or scenarios. This allows them to make inferences or generate insights based on these similar relationships.

By drawing parallels, a model can use what it knows about one situation to understand another, even when the surface details are different.

To make it practical with an example:

A startup facing challenges with customer acquisition notices similarities between their situation and how streaming services initially struggled before implementing freemium models.

Using analogical reasoning, they apply the lessons from the streaming industry to their own business, creating a free tier with premium upgrades.

This reasoning approach helps the company find innovative solutions by transferring successful strategies across industries, overcome “we’re different” thinking, and benefit from others’ experiences without repeating their mistakes.

Again I ask, can you see how an Agent with a Reasoning Model could help you discover opportunities like these? What if you had one running every day and its only job was to notify you of opportunities, every week?
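A very rough way to mimic "this situation looks like that one" is to describe each situation as a set of traits and measure the overlap. The sketch below uses Jaccard similarity for that. The traits and cases are invented, and a Reasoning Model compares situations in a much richer way than trait matching.

```python
# Find the closest analogy by trait overlap (Jaccard similarity).
# Traits and cases are hypothetical examples.


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared traits divided by all traits (0 = nothing shared, 1 = identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def closest_analogy(situation: set[str], cases: dict[str, set[str]]) -> str:
    """Return the name of the case that shares the most traits."""
    return max(cases, key=lambda name: jaccard(situation, cases[name]))


our_startup = {"subscription", "high trial friction", "low awareness", "digital product"}
cases = {
    "Early streaming services": {
        "subscription", "high trial friction", "digital product", "content licensing",
    },
    "Local restaurant": {"walk-in traffic", "low awareness", "physical product"},
}
print(closest_analogy(our_startup, cases))  # Early streaming services
```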

Practical Reasoning

Practical reasoning involves recommending actions based on understanding a user’s goals, limitations, and possible outcomes.

This approach mimics how humans make decisions: considering various options, weighing potential consequences, and choosing actions that align with objectives.

Business example:

A manufacturing company needs to decide whether to invest in new automation technology.

Using practical reasoning, they consider their goals (increased production, reduced costs), constraints (limited budget, staff training needs), and potential outcomes (improved efficiency but initial production delays).

They ultimately decide to implement the technology in phases rather than all at once.

This reasoning approach helps the company make balanced decisions that consider multiple factors, develop actionable implementation plans, and achieve realistic outcomes given their specific situation.

An Agent with a Reasoning Model could help you think through and reason your way to better decisions.
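Here is a small sketch of that goals-constraints-outcomes trade-off as a scoring exercise. The options, costs, scores, and weights are all invented for illustration, and the `Option` class and `best_option` function are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional

# Pick the best option that fits the budget, trading gains against disruption.
# All numbers are placeholders you would replace with your own estimates.


@dataclass
class Option:
    name: str
    cost: float             # upfront cost
    production_gain: float  # 0 to 1, higher is better
    disruption: float       # 0 to 1, higher is worse


def best_option(
    options: list[Option],
    budget: float,
    gain_weight: float = 0.6,
    disruption_weight: float = 0.4,
) -> Optional[Option]:
    """Drop options over budget, then return the highest-scoring one."""
    affordable = [o for o in options if o.cost <= budget]
    if not affordable:
        return None
    return max(
        affordable,
        key=lambda o: gain_weight * o.production_gain - disruption_weight * o.disruption,
    )


options = [
    Option("Automate everything at once", 900_000, 0.9, 0.8),
    Option("Phased rollout", 400_000, 0.7, 0.3),
    Option("Do nothing", 0, 0.0, 0.0),
]
choice = best_option(options, budget=500_000)
print(choice.name)  # Phased rollout
```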

Do you see how having a strategic thinking partner, in the form of a Reasoning Model, could help you not only understand your business better, but also make better decisions?

Let’s look at a few more ways you can strategically use Reasoning Models inside your business.

4. Using Reasoning Models In Your Business

Let’s explore how they can help your business through different types of reasoning and capabilities.

Content Marketing and Copywriting

- Content Planning: Using inductive reasoning, Reasoning Models can identify patterns in successful content and suggest new blog topics or campaign themes. For example, a digital agency could input past engagement data, and the Reasoning Model would infer which topics consistently perform well, explaining why each suggestion should work based on observed patterns. The Reasoning Model applies complexity handling to balance seasonal trends, audience preferences, and business goals when creating content calendars.

- Writing First Drafts: Through deductive reasoning, Reasoning Models can structure content logically from general principles to specific points. A software company might provide industry reports and key messages to the Reasoning Model, which then creates a whitepaper with clear sections that follow a logical flow—from problem statement to solution. The Reasoning Model uses inference capabilities to bridge information gaps and make connections between ideas that weren’t explicitly stated.

- SEO Optimization: Using analogical reasoning, Reasoning Models can apply successful keyword strategies from one content piece to another while maintaining natural language. An eco-friendly store could provide their top-performing page as an example, and the Reasoning Model would analyze why certain keywords work well in that context before applying similar patterns to new content. The Reasoning Model’s context understanding ensures keywords fit naturally rather than appearing forced.

- Editing Help: With abductive reasoning, Reasoning Models can identify the most likely problems in content and suggest improvements. When reviewing a long article, it might notice that certain paragraphs weaken the argument and hypothesize: “This section likely confuses readers because it contradicts an earlier point.” This practical problem-solving approach helps content teams produce clearer, more persuasive material with fewer revisions.

Advertising and Campaigns

- Ad Copy Creation: Using practical reasoning, Reasoning Models weigh various messaging approaches based on product attributes and audience goals. An e-commerce brand might provide product specifications and target audience profiles, and the Reasoning Model would create multiple ad versions highlighting different benefits—one emphasizing durability for practical buyers, another highlighting aesthetics for design-conscious customers. The Reasoning Model applies inference to determine which product features would matter most to different segments.

- Audience Targeting: Through analogical reasoning, Reasoning Models can transfer insights about one audience to understand another similar group. A marketing agency could explain their success with one demographic, and the Reasoning Model would identify which elements of that approach might work for a different but related audience. The model handles complexity by balancing multiple variables (age, interests, behaviors) to create truly differentiated messaging.

- Campaign Analysis: Employing abductive reasoning, Reasoning Models examine campaign performance data to determine the most likely explanations for success or failure. Given metrics on various ads, it might conclude: “Ad set A likely underperformed because the messaging focused on features rather than benefits, while your audience historically engages more with benefit-focused content.” This combines context understanding with inference to provide actionable insights.

Workflow Automation

- Customer Support: Using deductive reasoning combined with problem-solving capabilities, Reasoning Models can work through customer issues step-by-step following logical rules. When a customer describes a technical problem, the Reasoning Model applies general troubleshooting principles to their specific situation, walking through solutions in a systematic way. The Reasoning Model’s hybrid thinking allows it to quickly handle common questions while switching to more deliberate analysis for complex or unusual problems.

- Task Management: Through abductive reasoning, Reasoning Models can infer priorities and deadlines from email content even when not explicitly stated. An agency might have a Reasoning Model review client communications, where it recognizes phrases like “would be great to have by next week” as implied deadlines and organizes tasks accordingly. This context understanding helps create more accurate priority lists than simple keyword scanning.

- Workflow Integration: Employing practical reasoning, Reasoning Models make contextually appropriate decisions in automated systems. In an e-commerce operation, the Reasoning Model might notice low inventory for a seasonal product and, considering historical sales data and upcoming holidays, determine whether to trigger reordering immediately or wait. This complexity handling allows for more nuanced automation than simple if-then rules.
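For comparison, here is the kind of simple rule a Reasoning Model would be improving on in the inventory example: a basic reorder-point check. It is a sketch with made-up numbers, and the `seasonal_multiplier` parameter is an assumption standing in for the holiday judgment call a model would make from your historical data.

```python
# Basic reorder-point check: reorder when current stock will not cover
# expected demand during the supplier lead time (plus a safety buffer).
# All parameter values below are invented.


def should_reorder(
    stock: int,
    avg_daily_sales: float,
    lead_time_days: int,
    seasonal_multiplier: float = 1.0,  # e.g. 2.0 if a holiday doubles demand
    safety_stock: int = 0,
) -> bool:
    """Return True when stock is at or below the reorder point."""
    expected_demand = avg_daily_sales * seasonal_multiplier * lead_time_days
    return stock <= expected_demand + safety_stock


# Normal week: 120 units on hand, 70 expected to sell during lead time.
print(should_reorder(stock=120, avg_daily_sales=10, lead_time_days=7))  # False

# Holiday week: demand doubles, so 140 units are expected to sell.
print(should_reorder(stock=120, avg_daily_sales=10, lead_time_days=7,
                     seasonal_multiplier=2.0))  # True
```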

Data Analysis and Decision Support

- Finding Patterns: Using inductive reasoning and inference capabilities, Reasoning Models examine raw data to identify meaningful trends. A software company provides user behavior logs to the Reasoning Model, which might determine: “Users who engage with feature X in their first week have 60% higher retention rates, suggesting this feature delivers early value.” The Reasoning Model can handle complexity by considering multiple variables simultaneously (time of day, user demographics, feature interactions) to find non-obvious patterns.

- Explaining and Predicting: Through abductive reasoning, Reasoning Models generate the most plausible explanations for business events. When an e-commerce company sees a spike in sales for a previously slow-moving product, the Reasoning Model might connect this to a recent influencer mention by analyzing timing and referral data. The context understanding allows it to consider factors the business might not have thought to check.

- Creating Reports: Using deductive reasoning combined with practical reasoning, Reasoning Models can structure information logically while focusing on business-relevant conclusions. A startup CEO might provide various metrics to the Reasoning Model, which organizes them into a coherent narrative that follows from general performance to specific insights. The hybrid thinking capabilities allow it to handle both straightforward data reporting and more nuanced strategic implications.

- Decision Support: With analogical reasoning and problem-solving capabilities, Reasoning Models can compare current decisions to similar past scenarios. A product team deciding between features might describe their situation to the Reasoning Model, which identifies parallel decisions other companies have faced and the outcomes that followed. This context-rich approach helps teams see beyond their immediate perspective to consider proven patterns from other situations.

5. Getting Started with Implementation

To help you get started thinking through how to do this, here are a few best practices to follow.

- Set Clear Goals: Define exactly what you want the Reasoning Model to do in your workflow.

- Understand Limitations: Remember that Reasoning Models can sound confident but still make mistakes, especially on factual details.

- Keep Humans Involved: Use Reasoning Models to assist, not replace, human expertise. Have people review content generated by Reasoning Models.

- Provide Context: Give the Reasoning Model relevant information, guidelines, and data to work with. Without proper context, even the best reasoning models will produce generic, inaccurate results.

- Refine Your Approach: Treat initial outputs as drafts and improve your instructions as needed.

- Verify Information: Always fact-check the Reasoning Model’s output, especially for public content or important decisions.

- Monitor Costs and Value: Track API usage and make sure the time savings justify the expense.
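For the cost-tracking point, a back-of-the-envelope calculation goes a long way. The sketch below assumes per-million-token pricing, which is how API usage is commonly billed. The prices, token counts, and hourly rate are placeholders, so check your provider’s current pricing page for real numbers.

```python
# Rough API cost estimate and a simple "is it worth it" check.
# Prices and usage figures are placeholders, not real rates.


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Cost in dollars for a given number of input and output tokens."""
    return (
        input_tokens / 1_000_000 * input_price_per_million
        + output_tokens / 1_000_000 * output_price_per_million
    )


def is_worth_it(api_cost: float, hours_saved: float, hourly_rate: float) -> bool:
    """True when the value of the time saved exceeds the API cost."""
    return hours_saved * hourly_rate > api_cost


monthly_cost = estimate_cost(
    input_tokens=2_000_000,
    output_tokens=500_000,
    input_price_per_million=2.0,   # placeholder price in dollars
    output_price_per_million=8.0,  # placeholder price in dollars
)
print(f"Estimated monthly cost: ${monthly_cost:.2f}")  # $8.00
print(is_worth_it(monthly_cost, hours_saved=3, hourly_rate=50))  # True
```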

Are you starting to see how Reasoning Models can be strategically used in your business?

It’s very much like having a strategic advisor that can help you think through situations, solve problems, provide recommendations from analyses, and so on.

Strategic thinking and use dictate tactical implementation.

Frankly, as interesting as tactics may be, with AI the only thing that will last is your ability to think and act strategically. Tactics come and go. Strategic thinking remains.